Like any new tool, Ensign takes a little time to learn. But take it from us (a bunch of seasoned data science experts): it will open up a new world of data science applications for you. Check out this beginner-friendly introduction!

Ensign is a flexible database and data streaming engine built for data scientists by data scientists. We make it easy for data teams to build and deploy real-time data products and services that make an impact, no matter your use case.

To help you on your learning journey, we’ve established Ensign U, a curriculum designed to get your first project up and running.

First, the Pre-Reqs

If you’re new to real-time data streaming, pub/sub, and event-driven architecture, here are a few recommended resources:

- Blog: Introduction to streaming for data scientists (20 mins)

- Blog: From batch to online/stream (10 mins)

- Tutorial: Pub/Sub for Newbies (1 hr); skip around as needed
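If pub/sub is new to you, the core idea fits in a few lines. Here is a toy, in-memory broker written purely for illustration (the `Broker` class and its methods are hypothetical and are not part of Ensign or PyEnsign). Publishers send events to a named topic, every subscriber to that topic receives them, and neither side needs to know about the other. Each topic also keeps an append-only log, which is roughly what a topic is.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class Broker:
    """Toy in-memory pub/sub broker (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        # Each topic name maps to the handlers currently subscribed to it.
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)
        # Each topic also keeps an append-only log of everything published to it.
        self.logs: DefaultDict[str, List[Any]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Any) -> None:
        # Record the event in the topic's log, then fan it out to subscribers.
        self.logs[topic].append(event)
        for handler in self._subscribers[topic]:
            handler(event)


broker = Broker()
received: List[Any] = []
broker.subscribe("readings", received.append)
broker.publish("readings", {"sensor": "a1", "temp_c": 21.5})
broker.publish("alerts", {"sensor": "a1", "level": "high"})  # logged, but nobody is listening
print(received)  # → [{'sensor': 'a1', 'temp_c': 21.5}]
```

Real streaming engines add durability, ordering guarantees, and network transport on top of this basic shape, but the publisher/topic/subscriber roles are the same.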

Make sure you have Python installed. We recommend using Python >= 3.9.
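A quick way to confirm your interpreter meets that recommendation is a short check with the standard library (running `python --version` in a terminal works too). The helper name `python_ok` is our own, not part of any Ensign tooling.

```python
import sys

# Minimum Python version recommended in this guide.
MIN_PYTHON = (3, 9)


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON) -> bool:
    """Return True if the (major, minor) part of version_info meets the minimum."""
    return tuple(version_info[:2]) >= minimum


current = sys.version.split()[0]
if python_ok():
    print(f"Python {current} meets the recommended minimum")
else:
    print(f"Python {current} found; please upgrade to Python >= 3.9")
```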

Step 1. Start with a Use Case

Start with a use case in mind. It can be any streaming data project that interests you; your primary goal is to bring meaning to the data.

Looking for more inspiration?

Step 2. Pick Your Streaming Dataset(s)

Where is your data coming from? A data source can be a database, a public data API, a running web application, a bunch of IoT sensors, a file system, system logs, or other upstream sources.

Check out our Data Playground for publicly available APIs that stream data.
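Many public APIs are request/response rather than true streams, so a common pattern is to poll them on an interval and emit only the records you have not seen before. This sketch assumes each record is a dict with a unique `id` field, and it takes the fetch function as a parameter so you can plug in whichever API you pick; `poll_new_records` is a hypothetical helper, not part of Ensign.

```python
import time
from typing import Callable, Dict, Iterator, List, Optional, Set

Record = Dict[str, object]


def poll_new_records(
    fetch: Callable[[], List[Record]],
    interval_s: float = 60.0,
    max_polls: Optional[int] = None,
) -> Iterator[Record]:
    """Call fetch() repeatedly and yield only records whose "id" is new.

    fetch is any zero-argument function returning a list of dicts, e.g. a
    wrapper around an HTTP request to a public data API (an assumption here).
    max_polls=None polls forever.
    """
    seen: Set[object] = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for record in fetch():
            if record["id"] not in seen:
                seen.add(record["id"])
                yield record
        polls += 1
        # Wait before the next request, unless that was the last poll.
        if max_polls is None or polls < max_polls:
            time.sleep(interval_s)
```

The `seen` set grows without bound here; for a long-running job you would cap it or track a timestamp watermark instead.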

Step 3. Sketch Out Your Data Flow Architecture

Most data scientists want to start by training a machine learning model, since that’s what we’re taught to do. But this approach can leave you with a model that won’t fit into an application without a lot of painful MLOps work.

In our experience, data science projects are much easier to deploy if you map out your data flows first, and then start modeling.
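One lightweight way to map out a data flow before touching a model is to write each stage as a plain function: a source that produces events, transformations that filter or enrich them, and a sink that delivers them downstream. The sketch below chains Python generators; the stage names, sensor fields, and threshold rule are hypothetical placeholders for your own use case.

```python
from typing import Any, Dict, Iterable, Iterator, List

Event = Dict[str, Any]


def source(raw_rows: Iterable[Dict[str, str]]) -> Iterator[Event]:
    """Source stage: turn raw rows (e.g. CSV lines or API records) into typed events."""
    for row in raw_rows:
        yield {"sensor_id": row["sensor_id"], "value": float(row["value"])}


def clean(events: Iterable[Event], low: float = 0.0, high: float = 100.0) -> Iterator[Event]:
    """Transform stage: drop readings outside a plausible range."""
    for event in events:
        if low <= event["value"] <= high:
            yield event


def score(events: Iterable[Event], threshold: float = 80.0) -> Iterator[Event]:
    """Model stage: a placeholder rule you could later swap for a trained model."""
    for event in events:
        yield {**event, "alert": event["value"] > threshold}


def to_sink(events: Iterable[Event], sink: List[Event]) -> None:
    """Sink stage: deliver events downstream (a list stands in for a topic or app)."""
    for event in events:
        sink.append(event)


rows = [
    {"sensor_id": "a", "value": "42"},
    {"sensor_id": "b", "value": "-5"},
    {"sensor_id": "c", "value": "95"},
]
delivered: List[Event] = []
to_sink(score(clean(source(rows))), delivered)
print(delivered)
```

Because every stage has the same "events in, events out" shape, the model becomes just one more stage you can drop into a flow that already works end to end.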

Watch these short videos:

Step 4. Set Up Your Project Infrastructure

Here’s where you need to create an account and log into Ensign. These videos will help.

- Create a project: A project is a collection of topics and will serve as your data repository for streaming events.

- Name your topics: A topic is a log of events that contain information you’re interested in.

- Generate API keys: Use your API keys to connect your data source to your topic.

- Connect using your API keys: Establish a client connection to the Ensign server by passing in the ClientSecret and ClientID from your API keys, either via environment variables or a gitignored file inside the repo.
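Here is one way to keep your keys out of source code: read them from environment variables, and fall back to a JSON file that is listed in `.gitignore`. The variable names `ENSIGN_CLIENT_ID` and `ENSIGN_CLIENT_SECRET` and the file keys `ClientID`/`ClientSecret` are assumptions (they match our understanding of PyEnsign's defaults and the field names above, but confirm them against the PyEnsign docs for your version). `load_credentials` is a hypothetical helper.

```python
import json
import os
from pathlib import Path
from typing import Mapping, Optional, Tuple

# Assumed variable names; confirm against the PyEnsign documentation.
ENV_ID = "ENSIGN_CLIENT_ID"
ENV_SECRET = "ENSIGN_CLIENT_SECRET"


def load_credentials(
    path: Optional[Path] = None,
    env: Mapping[str, str] = os.environ,
) -> Tuple[str, str]:
    """Return (client_id, client_secret) from env vars, else from a JSON key file.

    The file is assumed to look like {"ClientID": "...", "ClientSecret": "..."}
    and should be listed in .gitignore so it is never committed.
    """
    client_id = env.get(ENV_ID)
    client_secret = env.get(ENV_SECRET)
    if client_id and client_secret:
        return client_id, client_secret

    if path is not None and path.exists():
        data = json.loads(path.read_text())
        return data["ClientID"], data["ClientSecret"]

    raise RuntimeError(
        f"Set {ENV_ID} and {ENV_SECRET}, or provide a gitignored JSON key file"
    )
```

You can then pass the two values to the client explicitly, or simply export the environment variables if your PyEnsign version reads them on its own.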

Step 5. Install PyEnsign

Install the pyensign SDK. Again, we recommend using Python >= 3.9.

You can pip install pyensign with:

pip install pyensign

If you already have an older version of pyensign installed (especially anything before v0.11b0), upgrade to the latest release with:

pip install --upgrade pyensign

Check out the docs if needed!
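To confirm which pyensign version you ended up with (`pip show pyensign` also tells you), you can ask the standard library's `importlib.metadata` (Python 3.8+). The helpers `installed_version` and `older_than` are our own names, and `older_than` is only a rough check: it compares major.minor and ignores pre-release tags such as the `b0` in v0.11b0. For exact comparisons you would use a proper version parser such as the third-party `packaging` library.

```python
import re
from importlib import metadata
from typing import Optional, Tuple


def installed_version(dist: str = "pyensign") -> Optional[str]:
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return metadata.version(dist)
    except metadata.PackageNotFoundError:
        return None


def older_than(version: str, minimum: Tuple[int, int] = (0, 11)) -> bool:
    """Rough check comparing only the leading major.minor numbers.

    Pre-release tags are ignored, so "0.11b0" and "0.11.3" both count as 0.11.
    """
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    return (int(match.group(1)), int(match.group(2))) < minimum


version = installed_version()
if version is None:
    print("pyensign is not installed; run: pip install pyensign")
elif older_than(version):
    print(f"pyensign {version} looks old; run: pip install --upgrade pyensign")
else:
    print(f"pyensign {version} is installed")
```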

Step 6. Run the Minimal PyEnsign Example

Check that your API keys and your pyensign installation are working by running the minimal Python example.

Step 7. Run the Earthquake Data Ingestion Example Code

Run the earthquake example to ingest data into your topic using your own API keys.
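The core of any earthquake ingester is converting each record from the source into a compact event before publishing it. The sketch below assumes the data comes from the USGS earthquake GeoJSON feed, where each feature carries `properties` (including `mag`, `place`, and `time` in epoch milliseconds) and a `geometry` whose `coordinates` are `[longitude, latitude, depth]`. The function names and output fields are hypothetical, and the publish step is deliberately left out because it depends on the PyEnsign client API.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


def feature_to_event(feature: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten one USGS-style GeoJSON feature into a small event payload."""
    props = feature["properties"]
    lon, lat, depth_km = feature["geometry"]["coordinates"][:3]
    # USGS reports "time" as milliseconds since the Unix epoch.
    occurred = datetime.fromtimestamp(props["time"] / 1000, tz=timezone.utc)
    return {
        "id": feature["id"],
        "magnitude": props["mag"],
        "place": props["place"],
        "time": occurred.isoformat(),
        "latitude": lat,
        "longitude": lon,
        "depth_km": depth_km,
    }


def parse_feed(body: str) -> List[bytes]:
    """Parse a GeoJSON FeatureCollection into JSON-encoded event payloads."""
    collection = json.loads(body)
    return [
        json.dumps(feature_to_event(feature)).encode("utf-8")
        for feature in collection["features"]
    ]
```

Encoding each event as JSON bytes keeps the payload self-describing; each of these would then be published to your topic with your own API keys.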

Step 8. Query Your Ingested Data

Once you get data into your topic, you can view the data by logging into your Ensign account and navigating to Projects > Project Name > Topic Name. You will see the details page for your topic, and you can run the sample Topic Query provided to view a few of the events you have successfully ingested.

You can also query your data using EnSQL; see an example of how to do that with PyEnsign and your earthquake data here.

Congrats!

Now you have data flowing into your topic. The world is your oyster!

Use this same process each time you have a new data science project, and your chances of getting it deployed will be that much greater!

Here are some common data science projects that can add value to an organization:

- Working with sensitive data? Apply transformations that mask or anonymize data from your data source.

- Create real-time machine learning models that learn on the go.

- Publish streams to web apps, mobile apps, dashboards, an

- Publish data from different topics into a new topic

- Collect data from edge devices and sensors.
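For the sensitive-data idea in that list, a common approach is to pseudonymize identifying fields with a keyed hash before events leave your source, so records can still be joined on the masked value without exposing the original. This sketch uses HMAC-SHA256 from the standard library; the field names and the secret key are hypothetical, and keyed hashing is pseudonymization rather than full anonymization.

```python
import hashlib
import hmac
from typing import Any, Dict, Iterable

# Hypothetical secret; in practice load it from an environment variable or secret store.
MASKING_KEY = b"replace-with-a-real-secret"


def mask_value(value: str, key: bytes = MASKING_KEY) -> str:
    """Return a stable keyed hash of value (same input and key give the same output)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_event(
    event: Dict[str, Any],
    sensitive: Iterable[str] = ("email", "name"),
    drop: Iterable[str] = ("ssn",),
) -> Dict[str, Any]:
    """Return a copy of event with sensitive fields hashed and dropped fields removed."""
    drop_set = set(drop)
    sensitive_set = set(sensitive)
    masked: Dict[str, Any] = {}
    for field, value in event.items():
        if field in drop_set:
            continue  # never forward these fields at all
        masked[field] = mask_value(str(value)) if field in sensitive_set else value
    return masked
```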

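Combining several topics into a new one often comes down to interleaving their events in time order. If each input stream is already sorted by timestamp, `heapq.merge` from the standard library does this lazily, without loading everything into memory. The sketch assumes events are dicts with a numeric `ts` field and tags each output event with its source topic; the topic names and the `merge_topics` helper are hypothetical, and writing the result to a real Ensign topic is left out.

```python
import heapq
from typing import Any, Dict, Iterable, Iterator, Mapping

Event = Dict[str, Any]


def _tag(name: str, events: Iterable[Event]) -> Iterator[Event]:
    """Yield copies of events labeled with the topic they came from."""
    for event in events:
        yield {**event, "source_topic": name}


def merge_topics(streams: Mapping[str, Iterable[Event]]) -> Iterator[Event]:
    """Interleave time-sorted event streams into one time-ordered stream.

    Each input stream must already be sorted by its "ts" field.
    """
    tagged = [_tag(name, events) for name, events in streams.items()]
    return heapq.merge(*tagged, key=lambda event: event["ts"])
```

Tagging happens in a separate generator function so each stream keeps its own topic name; a nested generator expression would late-bind the name and label every event with the last topic.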
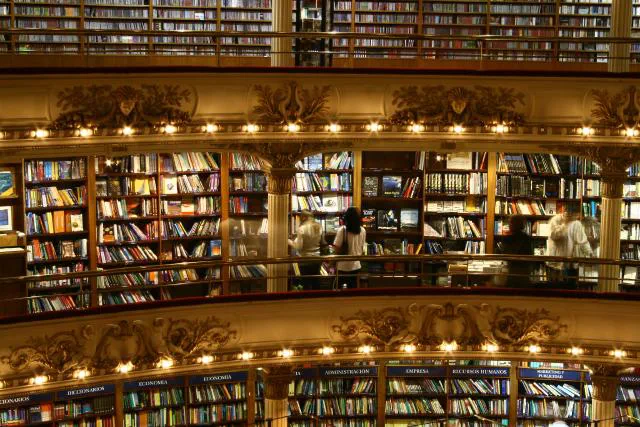

Photo by Pablo Dodda on Flickr Commons