Tools: How AI Agents Get Real Work Done

Now that we’ve covered AI agents in general, and prompts and LLMs specifically, let’s move on to tools.

Recall our definition: an AI agent is a system designed to achieve a specific objective using prompts, LLMs, tools, and guardrails in a defined environment. Of these components, tools have become increasingly important because they’re what allow AI agents to deliver real business impact.

Terminology Tip: Tool calling is also called function calling. Both refer to ways for LLMs to work with external systems and access data outside their training data.

What Are Tools? Why Do Agents Need Them?

Tools are how agents reach beyond their training to get things done. Without tools, agents can only talk; with tools, they can work. There are three reasons tools matter:

Context Enrichment

LLMs are trained on large general datasets like Common Crawl. They don’t know your business. Without this knowledge, they will hallucinate. Tools such as Retrieval-Augmented Generation (RAG) systems allow you to connect agents to your private databases so that they are grounded in your data and context.Dynamic Intelligence

LLMs are static: once trained and released, their parameters (or weights) don’t change. But your business is dynamic - it moves fast. New information arises daily that isn’t in the training set. Tools like web search or RAG let agents pull in fresh data, keeping them current.Proprietary Know-how

LLMs can summarize, answer questions, or search text, but they don’t know how to format slides, generate branded PDFs, or follow workflows unique to your company. By creating custom tools, you give agents proprietary capabilities that differentiate your business and drive value.

A Note on Context Engineering

Context engineering is a critical component of agentic systems. The quality of information you provide an agent largely determines the quality of its output. Context can come from prompts, short-term “memory”, databases, or tools like search and RAG.

We’ll save a deep dive on context engineering for a later post. For now, just note that tools are one of its most important enablers.

Tool Types

You can equip AI agents with different tools depending on their mission. The most relevant today include:

- Web Search: Agents fetch the latest information from the internet.

- File / Data Search (RAG): Agents retrieve insights from internal knowledge bases, databases, or warehouses.

- Document Parsers: Agents extract and interpret structured or formatted documents.

- Business Systems: Agents connect into CRM, ERP, HR, or finance systems to automate workflows.

- Productivity Tools: Agents interact with email, calendars, and collaboration apps to save time across teams.

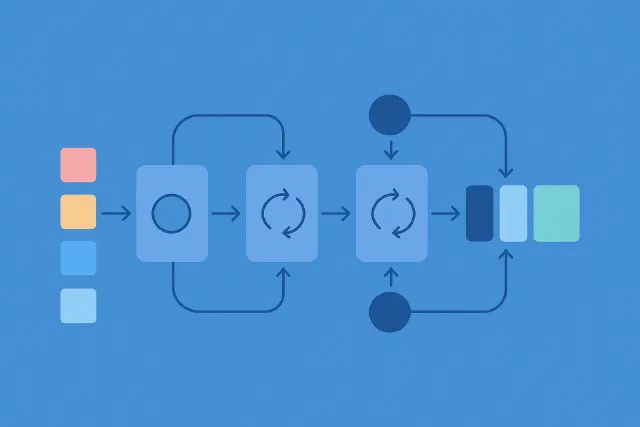

Connecting LLMs to Tools

LLMs don’t automatically know how to use tools. Typically, companies have developed custom APIs to connect LLMs to tools. More recently, engineers have built protocols to accelerate adoption and reduce friction:

- Model Context Protocol (MCP): An open-source standard (from Anthropic) that defines how an LLM can securely call a tool or service.

- Agent-to-Agent (A2A): An open protocol by Google that lets agents “talk” to each other.

- LangChain (and similar frameworks): Provide developer-friendly abstractions for defining and managing tool use.

Tools Come With Risks

While tools unlock capabilities, they also expand your risk surface. The main risks include:

- Security: A web search tool might pull in malicious data or code.

- Dependencies: If a tool or API goes down, or access is revoked, your agent may fail.

- Reliability: Misconfigured function calls can cause agents to behave unpredictably.

- Compliance: Tools that touch user data or PII can create privacy and regulatory exposure.

- Autonomy / Control Without guardrails, it is possible for agents to make errors that can be catastrophic to your business.

Example: Runaway Agent / Infinite Search

An agent keeps calling a tool in a loop, never converging on an answer. This wastes compute, racks up costs, and can overwhelm systems.

Guardrails, governance, and system design help manage and mitigate these risks. Guardrails are the topic of the next post.

The Key Take-away

Tools are becoming the key enabler of business-ready AI agents. They extend LLMs beyond static training to operate with your data, in your workflows, and for your outcomes. But they also introduce risks that must be managed through governance and guardrails.

Up next: Guardrails - How to keep agents powerful, but safe.

In the meantime, contact us if you’re interested in exploring an affordable proof-of-concept for your organization or a demo of Endeavor, our collaborative AI agent management platform for creating trusted, business-aligned agents that deliver real results.

Image generated using GPT-5